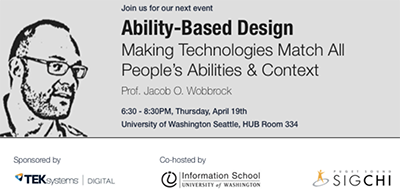

On April 19, 2018, I spoke at the Puget Sound Special Interest Group on Computer-Human Interaction (SIGCHI). Titled "Ability-Based Design: Making Technologies Match All People’s Abilities and Context," my talk was sponsored by TEK Systems and co‑hosted by Puget Sound SIGCHI and the University of Washington (UW) Information School. Turnout was the highest in recent memory, with about 150 people in attendance. In fact, pizzas had to be rush-ordered when the usual staple of sandwiches ran out!

I described ability-based design, an approach that designs for all people and all abilities by focusing on what people can do and by making computing systems accommodate their users, rather than the other way around. For example, if a touch screen could be calibrated to accept more flexible forms of touch, it wouldn't force a user who can't operate their fingers to procure a hand-mounted pointing stick or other technology. On this subject, I shared the SmartTouch project, which makes touch screens capable of modeling and interpreting different forms of touch, even when the touch does not come from a single finger that lands on and lifts from one spot without interference from other parts of the hand.

Along with the motivation for, and principles of, ability-based design, I described several other research projects my students and I have pursued, including a system for writing by making letter-like gestures with a trackball, an automatic user interface generator informed by the mouse-pointing abilities of users, a technique for improving the accuracy of a mouse cursor, a voice-controlled painting program, finger-driven screen-reading for making smartphones accessible to blind people, and smartphone text entry based on a Perkins Brailler.

Because ability-based design also focuses on the contexts in which people use technology, we are pursuing research on how people actually use mobile technology. For example, WalkType is a project that makes smartphone keyboards more accurate by modeling the gait of the user, specifically which foot is stepping forward, and correcting for subtle shifts in finger position that can result in missed keys. Other projects can detect the grip with which a user is holding a smartphone, when the user looks away and returns their gaze to the screen, and even the blood alcohol level of the user, all using commodity smartphone sensors. With the ability to sense these things comes the ability to accommodate them in various ways: for example, making interfaces operable with one hand, using on-screen highlights to help direct a user’s attention, or preventing a user’s car from starting when they are inebriated.

All living people have abilities, and accessibility is a concept for everyone. Ability‑based design emphasizes both points, striving to recognize all that people can do in the design of computing systems that better accommodate their users.