In the past couple of years, low-cost eye-tracking devices have been introduced to the consumer market. These are mainly marketed as gaming devices, but they have also found applications in computer access for people with physical disabilities.

Eye-trackers (or gaze-trackers) are devices consisting of infrared LEDs and one or more infrared cameras. By capturing the reflection of the infrared light off the user's eyes, an image-processing algorithm can determine where on a computer monitor the user is currently looking. Historically, these devices have been very expensive, but some now sell for less than $200.

For this article, I will focus on the Tobii Eye Tracker 4C. I have personally used this device for over a year. It retails for about $150 and is marketed to a gaming audience. An adhesive magnet allows the device to be mounted to the lower edge of your computer monitor, and a USB 3.0 connection transmits data to the computer. In order to use all of the available utilities, Windows 8, 8.1, or 10 is required. Some functionality is possible with Windows 7. Tobii has not made similar hardware available with drivers for Mac or Linux. (Other devices on the market have similar technical specifications and will work with much of the same software.)

I have found that the Eye Tracker 4C works best with a moderately large external monitor, but there is an upper size limit for monitors: either 27 inches or 30 inches, depending on aspect ratio. For the device to work properly, the basic positioning requirement is that your face is oriented towards the monitor, with your two eyes at the same height. In a traditional monitor setup, this means sitting upright facing the monitor, but a variety of other positions could be achieved with creative monitor mounting.

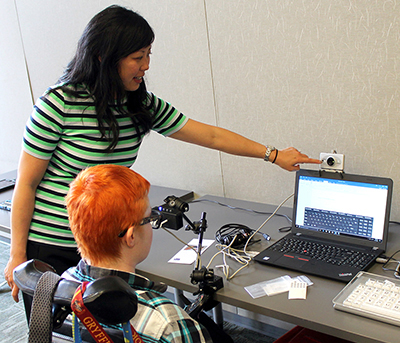

It is possible to use the eye-tracker with a laptop, but targeting will be less accurate. Screen size can also become a limiting factor when working on a laptop, as an on-screen keyboard large enough for eye tracking will likely occupy at least half of the screen. A few gaming-focused laptops are available with essentially the same eye-tracking hardware integrated into the laptop itself.

Tobii provides drivers and calibration software, along with a few other utilities. The calibration is quite simple, only requiring the user to stare at a few points on the screen for a few seconds apiece. By default, the software attempts to track both of your eyes, but it is configurable to track only the right or only the left eye.

Most of the auxiliary software provided by Tobii should be considered more of a demo than a useful piece of software, but one feature in particular is worth mentioning. You can configure the software to have the mouse cursor "jump" to the current gaze point when the user starts moving the mouse in that general direction. This can be quite useful if combining eye tracking technology with a physical mouse (or trackball, or joystick, etc.). This configuration allows large mouse movements across the screen to be handled by the eye tracker and small, precise movements to be controlled by a physical mouse. Other options include configuring the cursor to jump when a key is pressed and setting thresholds for the minimum jump distance.
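To make the "jump" behavior concrete, here is a minimal sketch of how such a decision could be made. This is not Tobii's implementation: the function name `should_jump`, the 200-pixel minimum distance, and the 45-degree tolerance are all assumptions chosen for illustration.

```python
import math


def should_jump(cursor, gaze, mouse_delta, min_jump_px=200.0, max_angle_deg=45.0):
    """Decide whether the cursor should warp to the gaze point.

    cursor, gaze: (x, y) screen positions in pixels.
    mouse_delta:  (dx, dy) of the most recent physical mouse movement.
    The thresholds are hypothetical defaults, not Tobii's actual values.
    """
    to_gaze_x = gaze[0] - cursor[0]
    to_gaze_y = gaze[1] - cursor[1]
    distance = math.hypot(to_gaze_x, to_gaze_y)
    speed = math.hypot(mouse_delta[0], mouse_delta[1])

    # Small corrections stay with the physical mouse; no motion means no jump.
    if distance < min_jump_px or speed == 0:
        return False

    # Cosine of the angle between the mouse motion and the direction to the gaze point.
    cos_angle = (to_gaze_x * mouse_delta[0] + to_gaze_y * mouse_delta[1]) / (distance * speed)
    return cos_angle >= math.cos(math.radians(max_angle_deg))
```

With these assumed thresholds, nudging the mouse to the right while looking at a point 500 pixels to the right triggers a jump, while nudging it to the left, or looking at a point only 50 pixels away, does not.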

To control the computer entirely with your eyes, additional software is required. A high-quality, free, and open-source option is OptiKey. (Full disclosure: I have contributed to OptiKey and its documentation.) At its core, OptiKey is a tool that allows users to interact with a Windows computer and generate synthesized speech output using eye gaze. It features an on-screen keyboard where you point with your eyes and click either with a switch or by dwelling on a key. Well-designed mouse emulation tools are also included, with options that make it easier to select small targets by zooming in on a screen region. OptiKey also features multiple methods of word prediction and completion from the keyboard and the ability to change common settings without exiting the keyboard environment. It is designed so that, once OptiKey is running, you can interact with the computer using only the eye-tracker (and optionally a switch). OptiKey contains keyboards in many languages and even includes a set of pictorial keyboards (designed to allow children and others who struggle with written language to communicate).
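Dwell selection is simple to sketch in code. The example below is not OptiKey's implementation (OptiKey is a Windows application with its own codebase); the class name `DwellSelector` and the one-second default are assumptions used only to illustrate the idea of "clicking" by looking at a key for long enough.

```python
class DwellSelector:
    """Fires a key once the gaze has rested on it for `dwell_seconds`.

    A hypothetical illustration of dwell clicking, not OptiKey's code.
    """

    def __init__(self, dwell_seconds=1.0):
        self.dwell_seconds = dwell_seconds
        self._key = None    # key currently under the gaze, if any
        self._since = None  # timestamp when the gaze arrived on that key

    def update(self, key, now):
        """Feed the key under the gaze (or None) and a timestamp in seconds.

        Returns the key when a dwell completes, otherwise None.
        """
        if key != self._key:
            # Gaze moved to a different key (or off the keyboard): restart the timer.
            self._key = key
            self._since = now
            return None
        if key is not None and now - self._since >= self.dwell_seconds:
            # Restart the timer so a held gaze repeats the key instead of firing every sample.
            self._since = now
            return key
        return None
```

In use, you would call `update` once per gaze sample with whichever key is under the gaze point. A shorter dwell time makes typing faster but increases accidental selections, which is why this kind of setting is usually user-configurable.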

It is entirely possible to use OptiKey to write source code, but some of the symbols that are frequently used in code are buried in OptiKey, requiring the user to navigate through multiple sub-keyboards before reaching the desired symbol. My amazing friend Maxie has been helping me create and implement some alternative keyboard layouts better suited to coding, and you can find our work (as well as instructions for making your own custom keyboards) on GitHub.

Project Iris is another software package that works with the eye-tracker. It is tailored towards allowing you to craft your own eye-tracking-based interfaces for Windows programs. Its most powerful feature is "interactors," on-screen rectangles that respond when you look at them, triggering keystrokes or other actions. The software is particularly useful when real-time input is important, such as in video games. This software isn't free, but a free 14-day trial is available.
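Conceptually, an interactor is just a rectangle with an action attached, plus a hit test against the current gaze point. The sketch below illustrates that idea only; it does not use or reflect Project Iris's actual configuration format, and the names `Interactor`, `hit_test`, and the `action` strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Interactor:
    """A screen rectangle that triggers an action when looked at (hypothetical model)."""
    name: str
    left: int
    top: int
    width: int
    height: int
    action: str  # e.g. a keystroke to send; the format here is an assumption

    def contains(self, x, y):
        # Left and top edges are inclusive; right and bottom edges are exclusive.
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


def hit_test(interactors, x, y):
    """Return the first interactor containing the gaze point, or None."""
    for interactor in interactors:
        if interactor.contains(x, y):
            return interactor
    return None
```

For a game, you might place one interactor along each screen edge and map them to the arrow keys, so that glancing at an edge steers in that direction.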

Dasher is another free and open-source on-screen keyboard, and its design works extremely well for noisy input like eye trackers. To use Dasher, you must first map the eye tracker input to the mouse. This can be done with Project Iris or with a simple FreePIE script (see below). Dasher is very good for fast text entry, but it is not good for editing or navigating user interfaces. I like to describe using Dasher with an eye tracker as "interactive reading." Dasher does have quite a steep learning curve, but at the top of that curve, it really is as easy as reading.

If you want to write your own code to interface with the eye tracker, FreePIE is a great place to start. It lets you write Python scripts that take input from any number of devices (including the eye tracker) and emulate the mouse and/or keyboard as output.
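Because raw gaze data is jittery, a script that drives the mouse from the eye tracker usually benefits from some smoothing. The sketch below shows one common approach, exponential smoothing, in plain Python. It deliberately leaves out FreePIE's device and mouse objects, since their exact names depend on the installed plugins; `GazeSmoother`, `clamp_to_screen`, and the default `alpha` of 0.2 are assumptions for illustration.

```python
class GazeSmoother:
    """Exponentially smooths noisy gaze samples (hypothetical helper)."""

    def __init__(self, alpha=0.2):
        # alpha near 0 is smoother but laggier; alpha of 1 passes samples through unchanged.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._pos = None

    def update(self, x, y):
        """Feed one raw gaze sample and return the smoothed (x, y) position."""
        if self._pos is None:
            # First sample: nothing to blend with yet.
            self._pos = (float(x), float(y))
        else:
            px, py = self._pos
            self._pos = (px + self.alpha * (x - px), py + self.alpha * (y - py))
        return self._pos


def clamp_to_screen(x, y, width, height):
    """Round to whole pixels and keep the point inside the screen bounds."""
    clamped_x = min(max(int(round(x)), 0), width - 1)
    clamped_y = min(max(int(round(y)), 0), height - 1)
    return clamped_x, clamped_y
```

In a real script, you would feed each gaze sample from the tracker into `update`, pass the result through `clamp_to_screen`, and then set the cursor position through whatever mouse interface your scripting environment provides.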